Bar Regulators Deploy AI to Police Attorney Misconduct

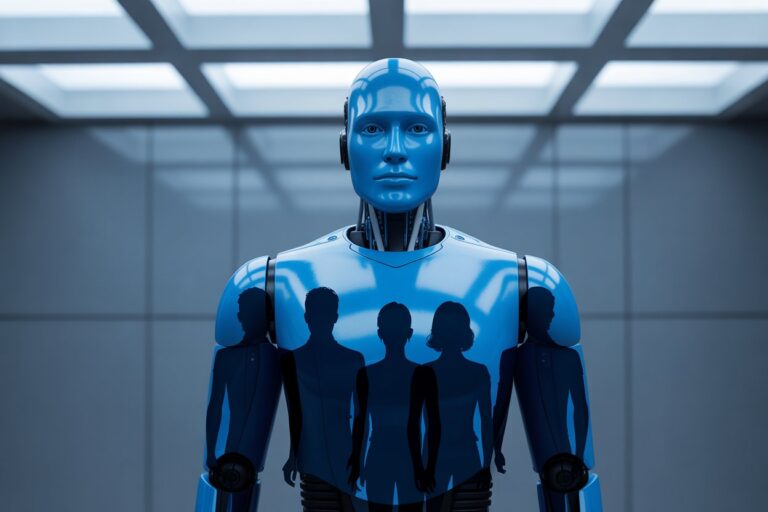

Artificial intelligence has entered the back offices of professional discipline. From complaint intake to data analysis, ethics investigators are testing whether algorithms can identify misconduct faster than human reviewers. The shift marks a quiet but consequential turn in legal governance: the same technology that complicates professional ethics is now being used to enforce it.

Automation Meets Accountability

Bar regulators and oversight agencies have begun experimenting with AI to manage workloads that once relied entirely on human judgment. Case-matching tools flag repetitive grievances, plagiarism detectors sweep filings for copied text, and statistical models track patterns that may indicate systemic neglect. The goal is efficiency, but the shift also raises questions about fairness, transparency, and the preservation of professional discretion.

As oversight bodies automate parts of the investigative process, a new legal frontier emerges: how far machine-assisted supervision can go without compromising due process. How regulators answer that question will shape the future of professional accountability and redefine what it means for human ethics officials to stay in charge when machines are doing the watching.

Most U.S. legal ethics enforcement still depends on manual review. Staff attorneys read complaints, interview witnesses, and recommend discipline to committees. But mounting caseloads and limited budgets have pushed regulators to explore automation. According to reports published by the American Bar Association and state bar associations, several offices now use AI-driven systems for early case screening and document organization. These tools can identify similar fact patterns, highlight duplicate allegations, and flag filings that mirror prior misconduct.

The ABA’s Formal Opinion 512 sets the national tone. It requires lawyers who use generative AI to review its outputs and, to the degree the task warrants, independently verify them before relying on them. Although the opinion is directed at practitioners, disciplinary counsel are lawyers too, so its principles extend to investigators who use AI in disciplinary work. Human oversight, it emphasizes, cannot be delegated to a machine. That principle has quickly become the cornerstone of responsible AI deployment inside professional regulation itself.

Ethics Oversight in the Age of Data

Artificial intelligence allows regulators to analyze volumes of data that were previously impractical to review. Complaint databases, audit reports, and filings contain patterns that may indicate emerging risks: repeated billing irregularities, template errors across multiple clients, or clusters of misconduct linked to certain practice areas. AI tools can flag these anomalies in seconds. What they cannot do is interpret them with the nuance required for fairness.

Legal scholars have warned that predictive analytics may blur the line between investigation and surveillance. If a system identifies “high-risk” practitioners based on prior complaints, it risks reinforcing historical bias. The Federal Trade Commission has already cautioned that AI applications must meet standards of transparency and verifiability. Under Section 5 of the FTC Act, overstating an algorithm’s accuracy or relying on opaque decision logic can be treated as an unfair or deceptive practice. For legal oversight, the lesson is that an algorithmic flag can never substitute for documented human reasoning.

Confidentiality and Due Process

Bar complaints often include privileged or highly sensitive information. When those records are processed by external or automated systems, regulators must consider confidentiality obligations under Model Rule 1.6. Most bars now prohibit uploading disciplinary files to consumer-grade AI tools. Instead, they rely on closed systems subject to internal auditing. Even so, questions persist about data retention, access controls, and whether vendors may use submitted records to train their models. An inadvertent disclosure could compromise not only an investigation but also public trust in the disciplinary process itself.

Due process presents an equally pressing concern. If a complaint is screened by algorithm before a human sees it, respondents may not know how that system influenced the decision to investigate. Unlike court proceedings, disciplinary processes often lack formal discovery mechanisms. Transparency requirements drawn from federal AI governance standards, such as the Office of Management and Budget’s M-24-10 memorandum, are beginning to inform bar policy. The memo instructs federal agencies to document algorithmic use, human oversight, and explainability. State-level ethics regulators are quietly adapting similar expectations.

The Expanding Role of AI Governance

As disciplinary agencies modernize their case systems, AI governance frameworks offer guidance. The NIST AI Risk Management Framework outlines documentation, testing, and traceability practices that mirror what bar authorities now seek to implement. Maintaining impact assessments, logging decisions, and verifying datasets align with professional responsibility principles. Several state bar task forces have cited NIST’s framework as a model for “explainable oversight” — the idea that every automated flag should be auditable by a human reviewer.

Colorado’s Artificial Intelligence Act (SB 24-205) reinforces that approach. Although directed at developers and deployers of high-risk systems, its core requirement to maintain detailed documentation and provide regulators with access upon request parallels the recordkeeping standards already familiar to ethics investigators. Transparency, once a compliance concept for industry, is becoming a procedural expectation inside professional regulation.

Balancing Efficiency with Judgment

The efficiency appeal of AI in ethics enforcement is clear. Complaint backlogs delay resolution and erode confidence. Automation can route cases faster and free staff for substantive review. Yet each improvement in speed introduces tension with the deliberative nature of ethics work. Professional discipline is not a numbers game; it is an exercise in fairness. The accuracy of a statistical model cannot replace the contextual understanding of misconduct that develops only through human reasoning.

Legal ethicists caution that overreliance on pattern recognition risks moral outsourcing. State v. Loomis remains a reference point. The case involved a sentencing risk-assessment tool rather than bar oversight, and the Wisconsin Supreme Court allowed the tool’s use, but only with written cautions to sentencing courts about its limitations, including a proprietary methodology that kept its inner workings undisclosed. The underlying concern applies broadly: when a system influences judgment but its logic is opaque, procedural fairness suffers. That reasoning now informs how regulators assess AI’s role in internal investigations, treating it as useful for triage but inappropriate for final determination.

Oversight of the Oversight

Bar regulators are beginning to draft their own internal AI policies. Several have adopted disclosure requirements for any use of algorithmic tools in investigative workflows. These policies often require that outputs be reviewed by at least one licensed attorney and that all model-assisted recommendations be recorded in the case file. The approach mirrors the disclosure requirements in judges’ standing orders on AI use and builds on a broader federal trend toward auditable automation.

Internationally, similar trends are emerging. The U.K. Solicitors Regulation Authority advises that technology in supervision must enhance, not replace, professional reasoning. Canada’s Law Society of Alberta and the Council of Europe Framework Convention on Artificial Intelligence both emphasize transparency and human accountability. Their experiences have influenced how U.S. bodies frame their own governance principles — not through direct adoption but through cautious comparison. The message is consistent: algorithms may assist oversight, but they cannot embody judgment.

The Human Element Remains the Standard

Even the most advanced oversight systems rely on final human review. The role of AI remains administrative, focused on flagging, sorting, and correlating information. Investigators, disciplinary counsel, and ethics boards still determine what those signals mean. That distinction is critical for preserving confidence in self-regulation. Without it, the profession risks delegating its conscience to code.

For now, regulators view AI as an efficiency tool rather than a decision-maker. The legal profession’s core principles of independence, confidentiality, and fairness continue to depend on human understanding. Automation may sharpen detection, but interpretation still requires the one quality machines cannot simulate: judgment informed by context. In that respect, AI has not transformed ethics oversight as much as it has clarified why the human element must remain at its center.

Sources

- American Bar Association: Formal Opinion 512, “Generative Artificial Intelligence Tools” (July 29, 2024)

- Colorado General Assembly: SB 24-205 “Artificial Intelligence Act” (2024)

- Council of Europe: “Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law” (May 2024)

- Federal Trade Commission Act, Section 5 (15 U.S.C. § 45)

- Florida Bar: Ethics Opinion 24-1 (2024)

- Justia: State v. Loomis, Wisconsin Supreme Court (2016)

- Law Society of Alberta: “The Generative AI Playbook” (2024)

- NIST: “AI Risk Management Framework” (2023)

- North Carolina State Bar: Formal Ethics Opinion 1, “Use of Artificial Intelligence in Law Practice” (2024)

- Solicitors Regulation Authority (U.K.): “Artificial Intelligence in the Legal Market” (2021)

- White House Office of Management and Budget: Memorandum M-24-10, “Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence” (March 28, 2024)

This article was prepared for educational and informational purposes only. It does not constitute legal advice and should not be relied upon as such. All cases, statutes, and sources cited are publicly available through official publications and reputable outlets. Readers should consult professional counsel for specific legal or compliance questions related to AI use.


Jon Dykstra, LL.B., MBA, is a legal AI strategist and founder of Jurvantis.ai. He is a former practicing attorney who specializes in researching and writing about AI in law and its implementation for law firms. He helps lawyers navigate the rapid evolution of artificial intelligence in legal practice through essays, tool evaluation, strategic consulting, and full-scale A-to-Z custom implementation.