AI Systems Increasingly Control Who Reaches Immigration Court in the United States

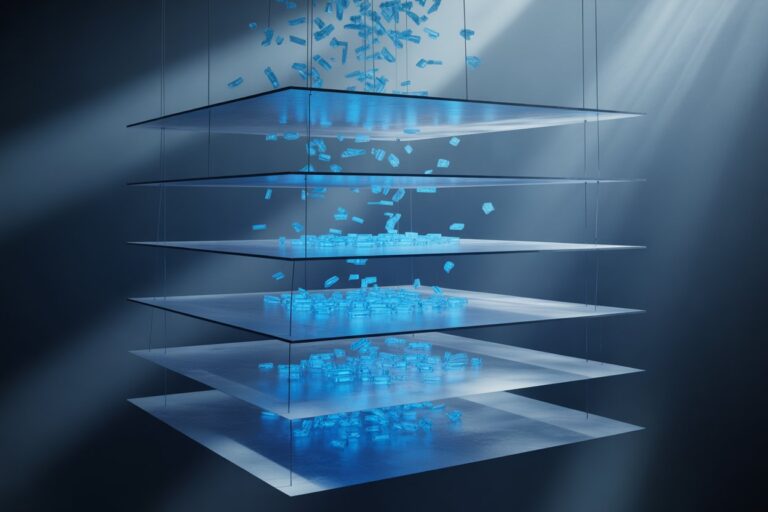

In U.S. immigration law, the right to stay often turns on credibility, paper trails, and split-second judgments in overburdened systems. Increasingly, those judgments are primed by opaque models that rank travelers as high risk, flag asylum claims as suspicious, or predict which lawful permanent residents are likely to naturalize. For lawyers, AI in immigration adjudication is no longer a speculative threat. It is a growing layer of invisible gatekeeping that reshapes who is interviewed, who is detained, and who ever reaches a courtroom.

Where AI Sits In The Immigration Pipeline

Immigration adjudication now runs through a dense web of AI-assisted systems that start operating long before a person ever stands before an immigration judge. At the border, U.S. Customs and Border Protection (CBP) relies on longstanding tools such as the Automated Targeting System (ATS), which mines passenger and cargo data to assign risk scores. CBP has also rapidly expanded facial recognition deployments at land borders, airports, and preclearance locations, and biometric face matching is now a routine part of processing international travelers at most major U.S. ports of entry. Civil liberties groups have documented how algorithmic risk scores can follow travelers for decades, with limited ability to inspect or challenge the underlying logic.

Alongside ATS, DHS is in the middle of replacing its legacy IDENT biometric database with the Homeland Advanced Recognition Technology (HART) system, a cloud-based platform designed to store and process massive volumes of facial images, fingerprints, iris scans, and linked biographic data. Government auditors and advocates have warned that HART’s scale, long retention periods, and information-sharing arrangements risk embedding inaccuracies and bias deep inside enforcement and adjudication workflows.

Public inventories now give a rough sense of scale. Analysis by the American Immigration Council found that the 2024 DHS Artificial Intelligence Use Case Inventory listed 105 active AI use cases at DHS immigration components alone, including 59 at CBP, 23 at ICE, and 18 at USCIS. More than 60 percent of these use cases fall under the category “Law and Justice.” The inventory, available through the DHS AI Use Case portal, includes tools for biometric identification, social media screening, fraud detection, and investigative support.

Separate reporting by advocacy groups and researchers has filled in details that the inventory treats in broad strokes. Research and coalition letters on DHS automation describe USCIS tools such as “Predicted to Naturalize,” which uses machine learning to help identify which lawful permanent residents are likely to become citizens, and “Asylum Text Analytics,” which screens asylum and withholding applications for signs of supposed plagiarism-based fraud. Those systems do not formally replace the adjudicator’s signature on a decision notice, but they shape which files are elevated as risks, which are marked routine, and which are treated as suspect from the start.

From Screening to Adjudication

For clients, the most immediate impact of AI is often invisible. A traveler might never know that ATS flagged their passenger record as high risk, prompting secondary inspection and questioning that, in turn, produces notes and referrals that shape later credible-fear interviews. A family seeking parole or humanitarian protection may encounter tools that cross-check social media, prior encounters, or textual similarities with other applications before a case officer ever opens the file.

Within USCIS, AI-assisted triage and fraud detection systems can influence which cases receive extra scrutiny, which are routed for expedited review, and which trigger requests for evidence or supervisory sign-off. In enforcement, ICE risk assessment tools and analytic platforms support decisions about detention, supervision, and investigative priorities. Reports from researchers and litigants have traced how automated risk classifications and algorithmic scoring systems can put applicants and respondents in higher-risk categories, even when the underlying data is incomplete or out of date.

None of this means that a single model “decides” a person’s fate in the way that a statute or judgment order does. It does mean that AI is increasingly the first actor in the chain that leads to those outcomes. That placement at the intake, screening, and triage stages creates legal questions that cut across immigration courts, agency adjudications, and federal judicial review.

Due Process Meets Algorithmic Scoring

Immigration law already operates under constrained procedural protections. The Supreme Court has treated removal proceedings as civil rather than criminal, which limits the scope of constitutional safeguards that apply. Administrative decisions about benefits, parole, and discretionary relief often receive even thinner review. Into that framework, AI inserts a new layer of opacity at precisely the point where due process depends on notice, an opportunity to be heard, and a neutral decision-maker.

Classic due process doctrine requires that applicants and respondents understand the basis of adverse decisions well enough to contest them. In practice, AI-assisted systems often operate through secret scoring rules, proprietary algorithms, or models that government agencies describe in general terms without disclosing parameters or training data. The DHS AI inventory may confirm that an AI tool exists, but it rarely explains how much weight its output carries in a specific adjudication, how error rates are measured, or what safeguards exist against over-reliance.

Advocates and scholars are already connecting those gaps to concrete legal theories. Litigation by groups such as Refugees International and the Harvard Immigration and Refugee Clinical Program has invoked the Freedom of Information Act (FOIA) to obtain records about USCIS use of AI in asylum processing, arguing that transparency about model performance and error rates is essential to enforcing asylum obligations. In October 2024, three immigration organizations filed a FOIA suit against DHS in the U.S. District Court for the District of Columbia seeking AI impact assessments and documentation of accuracy and bias testing. The case remains at an early stage. Elsewhere, administrative law experts have begun to frame AI-assisted adjudication as a problem of reason-giving: if an immigration officer relies on a risk score or text-classification flag, that reliance must appear in the administrative record and be intelligible enough for a court to review.

Under the Administrative Procedure Act (APA), a reviewing court must set aside agency action that is arbitrary, capricious, an abuse of discretion, or otherwise not in accordance with law. In cases reviewed on a formal hearing record, the court must also set aside action that is unsupported by substantial evidence. When immigration decisions rest on algorithmic outputs that agencies cannot adequately explain or defend, those decisions become vulnerable to APA challenges. Under long-standing doctrine, an agency must articulate a rational connection between the facts found and the choice made, and nothing in that standard exempts decisions shaped by automated tools. This framework gives immigration practitioners a concrete basis for challenging opaque AI systems that shape adjudications without transparency or adequate justification.

Bias, Profiling and the Risk of “Automating Deportation”

Immigration systems have long raised concerns about selective enforcement and structural bias. AI can magnify those problems by turning historical patterns into features of new decision rules. Analysis by community groups and researchers examining DHS automation argues that AI tools in the immigration system risk automating deportation by mining historical enforcement data, fraud referrals, and arrest records that already reflect racialized and nationality-based disparities.

Earlier controversies outside the United States show how quickly such systems can harden inequities. In the United Kingdom, a threatened judicial review and civil society pressure led the Home Office in 2020 to abandon a visa streaming algorithm that sorted applicants into red, amber, and green risk channels. Critics had argued that it relied heavily on nationality-based heuristics and produced discriminatory outcomes. In Canada, internal documents and testimony about the Chinook suite of tools used by Immigration, Refugees and Citizenship Canada (IRCC) have prompted debate over whether automated triage and analytics preserve enough individualized assessment in temporary residence decisions. That concern is sharpest for applicants from countries that already face high refusal rates.

U.S. tools raise parallel concerns. Algorithmic risk assessment and fraud analytics often start from data that is incomplete or error-prone: watchlists, prior encounters, social media posts, or textual similarities that may be benign. Research on automated risk assessment and profiling in migration shows how these inputs can lead to indirect discrimination, where facially neutral rules consistently disadvantage people from certain nationalities, ethnic groups, or language communities. For lawyers, the task is to surface those patterns in individual records and, when possible, to connect them to broader evidence from inventories, audits, and independent studies.

Global Frameworks for Migration AI

One reason AI in U.S. immigration adjudication deserves close attention is that global standards are moving toward explicit regulation of migration and asylum technologies. The European Union’s Artificial Intelligence Act entered into force in August 2024, and its high-risk obligations are expected to apply from 2026. It classifies many AI systems used in asylum, migration, and border control management as high risk. That label triggers requirements for documented risk management, data quality and governance, human oversight, transparency, and post-market monitoring. A 2025 briefing from the European Parliamentary Research Service on AI in asylum procedures emphasizes that these systems affect people in particularly vulnerable positions and therefore demand stricter safeguards than general administrative tools.

Within the Council of Europe, recent resolutions and reports on artificial intelligence and migration stress that AI-fueled border surveillance, biometric identification, and algorithmic risk scoring must be strictly necessary and proportionate, with robust protections for human dignity and non-discrimination. Research highlights the risk of creating a two-tier human-rights regime in which refugees and migrants receive systematically weaker protections than citizens when AI mediates access to territory and status.

The emerging AI guidance of the United Nations High Commissioner for Refugees (UNHCR) follows a similar logic. Its 2025 AI Approach and related research explore using AI for research on country-of-origin information, trend analysis, and administrative support. They also warn against delegating core credibility and status determinations to models that cannot fully capture subjective fear or trauma. Regional bodies such as the European Union Agency for Asylum have published guidance on digitalization and AI in asylum and reception systems that treats human-in-the-loop design and contestability as non-negotiable.

For U.S. practitioners, these frameworks do not have direct legal force in domestic proceedings. They do, however, offer reference points when arguing that immigration AI tools implicate international human rights norms, that certain practices run counter to emerging global consensus, or that agency discretion under U.S. statutes should be exercised in a direction that avoids building structurally discriminatory technology-enabled borders.

Federal AI Governance and the NIST Framework

The NIST AI Risk Management Framework, released in January 2023, provides voluntary guidelines for managing AI risks throughout system lifecycles. The framework emphasizes trustworthiness considerations including fairness, transparency, accountability, and robustness. While NIST’s framework is not legally binding, it has become a reference standard for federal agencies developing AI governance structures.

DHS has cited the NIST framework in its own AI governance efforts, though implementation remains uneven. A January 2025 report from the DHS Office of Inspector General found that while DHS has taken steps to develop AI policies and oversight mechanisms, the department has not fully implemented its AI strategy and lacks adequate mechanisms to verify the accuracy of AI-related data. The OIG warned that without sustained improvements in AI governance, DHS risks failing to safeguard public rights and privacy.

For immigration practitioners, the NIST framework provides vocabulary and concepts for challenging AI deployments that lack adequate risk management, testing, or human oversight. Arguments that an agency's AI use deviates from recognized best practices carry more weight when those practices are codified in widely adopted frameworks like NIST’s.

Transparency Mechanisms: FOIA, Inventories, and Oversight

One of the most tangible changes in the last two years is that DHS now publishes detailed AI inventories in response to federal transparency requirements. The 2024 AI Use Case Inventory and subsequent updates list unclassified and non-sensitive AI use cases across components, including whether they involve facial recognition, social media analysis, or other technologies with significant civil rights implications.

Civil society organizations and researchers have started to mine those inventories and cross-reference them with other disclosures. Analyses by groups such as the Brennan Center for Justice and independent technology policy outlets note that the inventories reveal far more AI usage than previously acknowledged, but still leave key questions unanswered about training data, vendor relationships, and performance metrics. The Department’s own inspector general has raised concerns about whether DHS governance structures are adequate to ensure that AI systems comply with privacy, civil rights, and civil liberties requirements.

Letters from coalitions of more than 140 civil society groups, along with research reports on automated immigration enforcement, argue that DHS should suspend AI tools that affect life-changing decisions in immigration enforcement and adjudications unless they can meet federal standards for responsible AI. Those letters point to specific tools such as Asylum Text Analytics and Predicted to Naturalize, as well as facial recognition deployments at border crossings, as examples where the stakes for error and bias are too high to tolerate opaque or weakly governed systems.

For advocates, FOIA remains a crucial instrument. Requests and litigation have sought privacy impact assessments, accuracy evaluations, procurement records, and internal guidance on how AI outputs are integrated into workflows. Even partial disclosures can be enough to frame discovery requests, support motions in individual cases, or supply context for expert declarations on how a client’s file may have been scored or flagged.

Practical Guidance for Immigration Practitioners

For lawyers advising clients in this environment, the first step is recognizing where AI is likely to be in play. That includes international travel and admission, asylum and withholding applications, humanitarian parole, family-based and employment-based benefits, naturalization, detention and release decisions, and enforcement targeting. DHS inventories and public reporting can help identify which tools are active at CBP, ICE, and USCIS, and whether they are flagged as rights-impacting under federal guidance.

In individual cases, counsel can ask targeted questions about whether AI-assisted tools were used to screen or flag a client’s application or record. That might take the form of discovery requests in federal court, administrative inquiries, or arguments that an incomplete record frustrates meaningful review. Where records reveal that an algorithmic risk score, social media analysis, or fraud flag played a role, lawyers can draw on comparative law, human rights reports, and technical critiques to argue that heavy reliance on such tools violates due process or statutory obligations to provide individualized assessment.

Lawyers who advise institutions can also influence policy upstream. Bar associations, legal clinics, and professional organizations have begun to issue guidance on AI use in legal practice and in government decision-making. Immigration practitioners can press agencies to adopt clear governance frameworks that include impact assessments, external audits, public documentation of training data and error rates, and mechanisms for individuals to contest AI-assisted findings. Those demands align with global trends and with broader U.S. debates over AI governance in welfare, criminal justice, and financial regulation.

Most importantly, practitioners can help ensure that AI does not become an excuse to normalize thin procedures in systems that already strain the concept of due process. The core questions remain the same: Who is making the decision? On what basis? And how can the affected person understand and challenge that choice? AI may reshape the mechanics of immigration adjudication, but it does not displace the legal and constitutional obligations that bind the agencies deploying it.

Sources

- Access Now: “Uses of AI in Migration and Border Control: A Fundamental Rights Approach to the Artificial Intelligence Act” (2022)

- American Immigration Council: “Invisible Gatekeepers: DHS’ Growing Use of AI in Immigration Decisions,” by Steven Hubbard (May 13, 2025)

- CBC News: “Immigration lawyers concerned IRCC’s use of processing technology leading to unfair visa refusals,” by Shaina Luck (2024)

- Columbia Undergraduate Law Review: “Surveillance Beyond Borders: How Courts Can Check the Power of AI Within the Immigration System,” by Ella Hummel (Dec. 2024)

- Council of Europe: “Artificial Intelligence and Migration” (2025)

- Department of Homeland Security: “Artificial Intelligence Use Case Inventory” (Dec. 16, 2024)

- Department of Homeland Security: “AI Use Case Inventory Library” (June 30, 2025)

- Department of Homeland Security, CBP: “DHS/CBP/PIA-006 Automated Targeting System (ATS) – Privacy Impact Assessment Update”

- Department of Homeland Security, OBIM: “DHS/OBIM/PIA-004 Homeland Advanced Recognition Technology System (HART) Increment 1” and 2024 PIA Update

- DHS Office of Inspector General: “DHS Has Taken Steps to Develop and Govern Artificial Intelligence, but Enhanced Oversight Is Needed” (Jan. 30, 2025)

- Electronic Frontier Foundation: “EFF & 140 Other Organizations Call for an End to AI Use in Immigration Decisions” (Sept. 5, 2024)

- European Parliamentary Research Service: “Artificial Intelligence in Asylum Procedures in the EU” (July 2025)

- European Union: “Artificial Intelligence Act,” Regulation (EU) 2024/1689 (July 12, 2024)

- Letter from Civil Society Organizations to DHS: “Cancel Use of AI Technologies for Immigration Enforcement and Adjudications by Dec. 1, 2024” (Sept. 4, 2024)

- Mijente: “Automating Deportation,” by Julie Mao, Paromita Shah, Hannah Lucal, Aly Panjwani and Jacinta Gonzalez (June 2024)

- National Institute of Standards and Technology: “AI Risk Management Framework” (Jan. 26, 2023)

- Statewatch: “UK: Threat of legal challenge forces Home Office to abandon ‘racist visa algorithm’” (Aug. 2020)

- Syracuse Law Review: “Accountability in Immigration: DHS Faces Pushback Over Rapid A.I. Expansion” (Oct. 2024)

- United Nations High Commissioner for Refugees: “Unlocking Artificial Intelligence’s Potential in COI Research,” Research Paper No. 44 (Feb. 2025)

- United Nations High Commissioner for Refugees: “UNHCR AI Approach” (Aug. 2025)

This article was prepared for educational and informational purposes only. It does not constitute legal advice and should not be relied upon as such. All cases, regulations, and sources cited are publicly available through court filings, government publications, and reputable media outlets. Readers should consult professional counsel for specific legal or compliance questions related to AI use.


Jon Dykstra, LL.B., MBA, is a legal AI strategist and founder of Jurvantis.ai. He is a former practicing attorney who specializes in researching and writing about AI in law and its implementation for law firms. He helps lawyers navigate the rapid evolution of artificial intelligence in legal practice through essays, tool evaluation, strategic consulting, and full-scale A-to-Z custom implementation.