Can AI Build a Legal Argument, or Only Mimic One?

Artificial intelligence can summarize a case faster than any junior associate, but can it think like one? As law firms deploy AI on real fact patterns, the critical test begins: do these tools enhance legal reasoning, or simply automate its surface? The answer reveals both breakthrough and limitation.

Testing AI on Real-World Legal Fact Patterns

Law has always been the art of turning facts into arguments. In 2025, researchers began testing whether AI could do the same. A randomized controlled trial published by Governance AI found that reasoning-enabled models using retrieval-augmented generation (RAG) produced higher-quality legal memoranda than standard chatbots. They were better at organizing facts, identifying causes of action, and citing relevant precedent. Yet they still struggled with applying rules to nuanced human conduct and assessing evidentiary sufficiency.

Other studies confirm the mixed record. Research on AI legal reasoning observes that while large language models can mimic IRAC structure, they often substitute formal logic for legal judgment. They detect issues but not strategy, weighing all facts equally rather than favoring the ones that move a judge or jury. In short, they can “sound” like a lawyer but lack the moral and rhetorical intuition that advocacy requires. AI can organize facts, identify missing elements, and suggest supporting evidence. It can also hallucinate authority, blur burdens of proof, and overlook the nuance that separates a strong argument from a merely plausible one.

From Legal Search to Structured Reasoning in AI Tools

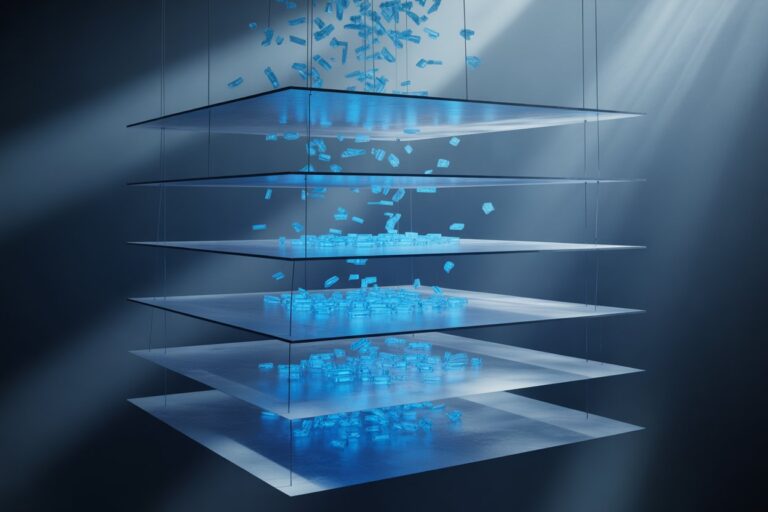

Traditional AI tools assisted with retrieval: keyword searches, case summaries, and citation mapping. Modern reasoning models attempt something more ambitious, planning before drafting. They break a problem into logical steps, retrieve relevant law, and then synthesize an argument. This process mimics the mental scaffolding of legal reasoning, though it still depends on what data the model has seen and how it interprets the prompt.

According to the Governance AI study, lawyers using reasoning-enabled systems completed drafting tasks more quickly and with modest quality gains. The models performed best when fed clear, jurisdiction-specific prompts and were least reliable when asked to infer unstated facts. They could apply the letter of the law but faltered on questions of equity, policy, or discretion, areas where human empathy still anchors judgment. The next frontier in legal technology is reasoning, not speed.

How Lawyers Can Feed Facts to AI for Better Legal Reasoning

The difference between a weak and strong AI-generated argument often begins with the input. Fact patterns that are chronological, explicit about legal issues, and transparent about both favorable and unfavorable facts yield better results. The most effective prompts direct the model to test both sides of a claim, identify evidentiary gaps, and suggest what additional proof would make a case stronger.

For example, lawyers experimenting with GPT-4-class tools found that specifying roles (“act as counsel for defendant”) and objectives (“identify three strongest defenses and necessary supporting evidence”) improved accuracy. Asking the model to rewrite the same facts from the opposing side further revealed where bias and omission entered the draft. This technique mirrors the practice of pretrial argument testing but at machine speed.

Still, presentation matters. If a prompt omits context such as jurisdictional limits, procedural posture, or relevant timelines, the AI may produce elegant nonsense. The remedy is transparency: give the model complete factual information and instruct it to separate governing law from illustrative authority. Treat it less like a research clerk and more like a witness who needs to be led.
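The prompting advice in this section can be sketched in code. The Python below is a minimal illustration, not a tested firm workflow: the `FactPattern` class, the `build_prompt` function, the field names, and the wording of the generated prompt are all hypothetical. It refuses to build a prompt when jurisdiction, procedural posture, or facts are missing, orders the facts chronologically, and produces the same prompt for both sides so the drafts can be compared.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FactPattern:
    """Hypothetical container for the context a prompt should carry."""
    jurisdiction: str
    procedural_posture: str
    facts: List[Tuple[str, str]]  # (ISO date, fact), favorable or not


def missing_context(fp: FactPattern) -> List[str]:
    """Name any context fields that were left empty."""
    missing = [name for name in ("jurisdiction", "procedural_posture")
               if not getattr(fp, name).strip()]
    if not fp.facts:
        missing.append("facts")
    return missing


def build_prompt(fp: FactPattern, side: str, objective: str) -> str:
    """Assemble a role-and-objective prompt, refusing incomplete input."""
    gaps = missing_context(fp)
    if gaps:
        raise ValueError("missing context: " + ", ".join(gaps))
    # ISO dates sort chronologically as plain strings.
    timeline = "\n".join(f"- {day}: {fact}" for day, fact in sorted(fp.facts))
    return "\n".join([
        f"Act as counsel for the {side}.",
        f"Jurisdiction: {fp.jurisdiction}",
        f"Procedural posture: {fp.procedural_posture}",
        "Facts in chronological order (favorable and unfavorable):",
        timeline,
        f"Objective: {objective}",
        "Separate governing law from illustrative authority.",
        "List evidentiary gaps and the proof that would close them.",
    ])


# Invented example facts, used only to exercise the sketch.
pattern = FactPattern(
    jurisdiction="New York",
    procedural_posture="Motion to dismiss pending",
    facts=[("2024-03-02", "Plaintiff reported the injury."),
           ("2024-01-15", "Defendant received a written hazard warning.")],
)
objective = "Identify the three strongest arguments and the evidence each needs."
# Same facts, opposite roles: comparing the drafts shows where bias enters.
prompts = {side: build_prompt(pattern, side, objective)
           for side in ("plaintiff", "defendant")}
```

Raising an error on missing context is a design choice: it forces the drafter to supply the jurisdiction and posture rather than letting the model guess them.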

Evidence Matters: What AI Misses and What It Can Reveal

Lawyers know that a convincing argument is only as strong as its evidence. AI, however, has no sense of admissibility. It may identify “evidence” that is irrelevant, cumulative, or barred by privilege. According to Damien Charlotin’s AI Hallucination Cases Database, which tracks court decisions where AI-generated content was found to contain fabricated citations, 509 cases have been identified as of November 2025. The problem was not malice but misunderstanding: the models could not distinguish between factual assertion and evidentiary sufficiency.

Properly guided, however, AI can still assist in evidence planning. When prompted to “list categories of proof required to establish negligence” or “identify missing documentation to substantiate damages,” models often generate structured checklists that help human lawyers organize discovery. Used this way, AI acts less as a decision-maker than as a second set of eyes that never tires of reviewing records.
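A checklist of this kind is easy to represent as data. The sketch below is a hedged illustration: the four elements of negligence are standard, but the categories of proof attached to each element and the `evidence_gaps` function are hypothetical examples, not a statement of what any court requires.

```python
from typing import Dict, List, Set

# Elements of negligence mapped to ILLUSTRATIVE categories of proof.
# The categories are assumptions chosen for the example.
REQUIRED_PROOF: Dict[str, List[str]] = {
    "duty": ["relationship between the parties"],
    "breach": ["incident report", "witness statements"],
    "causation": ["medical records", "expert opinion"],
    "damages": ["medical bills", "wage records"],
}


def evidence_gaps(required: Dict[str, List[str]],
                  on_file: Set[str]) -> Dict[str, List[str]]:
    """Return, per element, the proof categories not yet in the file."""
    gaps: Dict[str, List[str]] = {}
    for element, categories in required.items():
        missing = [c for c in categories if c not in on_file]
        if missing:
            gaps[element] = missing
    return gaps


on_file = {"relationship between the parties", "incident report",
           "medical records", "medical bills"}
gaps = evidence_gaps(REQUIRED_PROOF, on_file)
for element, missing in gaps.items():
    print(f"{element}: still need {', '.join(missing)}")
```

The output is a discovery to-do list for a human lawyer; nothing in it speaks to whether an item would be admissible.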

Yet the risks remain systemic. AI tools can reinforce confirmation bias by overemphasizing facts favorable to the side they are told to represent. Without explicit instruction to analyze both perspectives, the model may conceal weaknesses rather than expose them. Responsible use means prompting for balance, testing adversarially, and having a lawyer verify every output against the governing procedural rules.

Ethical and Professional Guardrails

For every success story, there is a cautionary tale. In Mata v. Avianca (S.D.N.Y. 2023), two attorneys were sanctioned for submitting fabricated AI-generated citations. The court held that reliance on an unverified chatbot violated Rule 11 and fundamental duties of candor. The episode has since become the case study for why verification, not automation, is the core of competence.

The American Bar Association’s Formal Opinion 512 clarifies that lawyers using generative AI must supervise and independently verify AI output. Florida has issued a formal ethics opinion emphasizing disclosure, competence, and training, while Colorado has published bar guidance and proposed rule updates on AI. Internationally, the Law Society of Alberta’s Generative AI Playbook and the U.K. Solicitors Regulation Authority’s Risk Outlook and compliance tips caution that lawyers must retain professional judgment and oversight when using AI. This duty of supervision is now being reinforced at every level of legal regulation.

For firms integrating AI into workflows, ethics now requires documentation of provenance, accuracy testing, and human oversight. Treating AI as a research partner is permissible; treating it as co-counsel is not. Competence once meant mastering precedent; it now includes understanding how machine reasoning interacts with privilege, confidentiality, and procedural fairness.

Treating AI as Research Partner, Not Co-Counsel

Firms adopting AI often discover that the technology works best when paired with human discipline. Structured templates, known as “AI reasoning playbooks,” can standardize prompts for issue spotting, legal analysis, and evidentiary mapping. These templates instruct the model to identify claims, defenses, elements, and evidence categories, while reminding the user to verify jurisdiction and citations manually.
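One way to picture such a playbook is as a small set of named templates. The sketch below uses Python's standard `string.Template`; the task names, the template wording, and the `render` helper are hypothetical. The reviewer checklist is returned separately from the prompt because it is addressed to the human user, not to the model.

```python
from string import Template
from typing import Dict, List, Tuple

# Hypothetical playbook: one standardized prompt per task.
PLAYBOOK: Dict[str, Template] = {
    "issue_spotting": Template(
        "Jurisdiction: $jurisdiction\nFacts:\n$facts\n"
        "Identify each claim and defense and list the elements of each."
    ),
    "evidentiary_mapping": Template(
        "Jurisdiction: $jurisdiction\nClaim: $claim\nFacts:\n$facts\n"
        "For each element of the claim, list the categories of evidence needed."
    ),
}

# Reminders for the human reviewer; never sent to the model.
REVIEW_CHECKLIST: List[str] = [
    "Confirm the jurisdiction is correct.",
    "Verify every citation against primary authority.",
]


def render(task: str, **fields: str) -> Tuple[str, List[str]]:
    """Fill a playbook template; substitute() raises KeyError on a missing field."""
    prompt = PLAYBOOK[task].substitute(**fields)
    return prompt, list(REVIEW_CHECKLIST)


prompt, checklist = render(
    "issue_spotting",
    jurisdiction="Colorado",
    facts="- Tenant slipped on an icy walkway.",
)
```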

Some firms now maintain internal “AI registers” cataloging every model used, the purpose of each, and associated risk ratings. The NIST AI Risk Management Framework and ISO/IEC 42001 offer blueprints for documenting oversight and human review. These frameworks have quickly become insurance benchmarks. Cyber-liability carriers increasingly request proof that firms conduct AI audits and maintain version logs before underwriting professional coverage.
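An AI register can be as simple as a list of structured records. The following sketch is an assumption about what such a register might hold: the field names, the example entries, and the audit intervals per risk rating are hypothetical and are not drawn from the NIST framework or ISO/IEC 42001.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass(frozen=True)
class RegisterEntry:
    """One row of a hypothetical internal AI register."""
    model: str
    version: str
    purpose: str
    risk_rating: str  # "low", "medium", or "high"
    last_audit: date


# Assumed audit intervals; a firm would set its own policy.
AUDIT_INTERVAL: Dict[str, timedelta] = {
    "high": timedelta(days=90),
    "medium": timedelta(days=180),
    "low": timedelta(days=365),
}


def overdue(register: List[RegisterEntry], today: date) -> List[RegisterEntry]:
    """Entries whose last audit is older than the interval for their rating."""
    return [e for e in register
            if today - e.last_audit > AUDIT_INTERVAL[e.risk_rating]]


register = [
    RegisterEntry("drafting-assistant", "2.1", "first drafts of memoranda",
                  "high", date(2025, 1, 10)),
    RegisterEntry("transcript-summarizer", "1.4", "deposition summaries",
                  "low", date(2025, 1, 10)),
]
late = overdue(register, today=date(2025, 6, 1))
print([e.model for e in late])
```

Keeping the version in each entry gives the register a version log alongside the audit dates.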

In practice, this means aligning ethical, technical, and contractual safeguards. Vendor agreements should include audit rights, disclosure of training data sources, and indemnity for hallucinated content. Internal protocols should require that any AI-assisted argument be independently verified against primary authority. The goal is not to eliminate risk but to make it traceable.
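A verification protocol needs a list of what to verify. As a rough sketch, the Python below pulls reporter-style citations out of a draft and queues each one as unverified. The regular expression covers only a handful of federal reporters and will miss many citation forms, the function name is hypothetical, and the citations in the sample text are placeholders rather than references to real decisions.

```python
import re
from typing import Dict, List

# A deliberately small, incomplete list of federal reporter abbreviations.
REPORTERS = ["F. Supp. 3d", "F. Supp. 2d", "F. Supp.",
             "F.4th", "F.3d", "F.2d", "S. Ct.", "U.S."]

# volume, reporter, first page: for example "123 F.3d 456"
CITATION = re.compile(
    r"\b\d{1,4} (?:" + "|".join(re.escape(r) for r in REPORTERS) + r") \d{1,5}\b"
)


def verification_queue(draft: str) -> List[Dict[str, str]]:
    """List each distinct citation once, marked for human verification."""
    unique = list(dict.fromkeys(CITATION.findall(draft)))
    return [{"citation": c, "status": "unverified"} for c in unique]


# Placeholder citations for illustration only.
draft = ("Doe v. Roe, 123 F.3d 456 (9th Cir. 1997); "
         "Poe v. Moe, 410 F. Supp. 2d 789 (S.D.N.Y. 2006); "
         "see also 123 F.3d 456.")
queue = verification_queue(draft)
for item in queue:
    print(item["citation"], "->", item["status"])
```

Extraction only finds strings that look like citations. Whether the case exists and supports the proposition still has to be checked by a person against primary authority.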

The Horizon: From Argument Generation to Judgment Prediction

Legal reasoning benchmarks are rapidly evolving. Academic evaluations such as LegalBench test models not only on correctness but also on their ability to handle complex legal reasoning tasks. Early findings suggest models can predict case outcomes with some statistical accuracy yet still misjudge the moral weight of a fact pattern. Machines can simulate doctrine but not discretion.

Developers are now experimenting with “argument-chain” models that justify every conclusion with a documented premise, creating an audit trail of reasoning. In theory, these could satisfy both explainability and due process standards. In practice, they may simply formalize the biases embedded in training data. The question is no longer whether AI can argue, but whether it can argue fairly.
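The idea of an audit trail can be made concrete with a toy data structure. This sketch does not describe how any actual "argument-chain" model works; the `Step` class, the identifier scheme, and the `unsupported` check are hypothetical. It shows only how a reviewer might test that every conclusion rests on a recorded fact, a recorded authority, or an earlier supported conclusion.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Step:
    """One conclusion in the chain and the premise IDs it relies on."""
    id: str
    claim: str
    premises: Tuple[str, ...]


def unsupported(chain: List[Step], sources: Dict[str, str]) -> List[str]:
    """IDs of steps that cite no premise, or a premise that is not on record.

    A flagged step is not added to the record, so anything built on it
    is flagged as well.
    """
    known = set(sources)
    flagged: List[str] = []
    for step in chain:
        if not step.premises or any(p not in known for p in step.premises):
            flagged.append(step.id)
        else:
            known.add(step.id)
    return flagged


# Invented record and chain, for illustration only.
sources = {"F1": "Walkway was icy at 8 a.m.",
           "A1": "Placeholder statute on landlord duties"}
chain = [
    Step("C1", "Landlord owed a duty to clear the walkway.", ("F1", "A1")),
    Step("C2", "Landlord had notice of the ice.", ("C1", "F9")),  # F9 not on record
    Step("C3", "Landlord breached the duty.", ("C2",)),
]
print(unsupported(chain, sources))
```

A check like this confirms that premises are documented. It says nothing about whether the premises are true or the inference is fair, which is where bias in the underlying data would still pass through.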

For law firms, the implications are immediate. As predictive systems enter litigation strategy and settlement modeling, lawyers will need to explain not just the output but the process that produced it. The client’s right to reasoned advice now extends to machine-assisted reasoning. Transparency is becoming the new standard of care.

Practical Takeaways for Law Firms Using AI

- Provide clear fact patterns, jurisdiction, and procedural posture when prompting.

- Ask AI to generate arguments for both sides and identify missing evidence.

- Verify every citation and rule application through human review.

- Document AI use and supervision to meet professional responsibility standards.

- Maintain AI registers and follow NIST or ISO oversight frameworks.

- Treat outputs as draft insights, never as final work product.

Sources

- American Bar Association: Formal Opinion 512 (July 29, 2024)

- Colorado General Assembly: Senate Bill 24-205: Consumer Protections for Artificial Intelligence (Signed May 17, 2024; Effective June 30, 2026)

- Damien Charlotin: AI Hallucination Cases Database (2023-2025)

- Florida Bar: Florida Bar Explores AI Guardrails (Aug. 15, 2025)

- Governance AI: AI-Powered Lawyering – AI Reasoning Models, Retrieval Augmented Generation, and the Future of Legal Practice (2025)

- ISO/IEC 42001: Artificial Intelligence Management System Standard (2023)

- Law Society of Alberta: Generative AI Playbook (2024)

- LegalBench: A Collaboratively Built Benchmark for Measuring Legal Reasoning in Large Language Models (2023)

- Mata v. Avianca Inc., 1:22-cv-01461 (S.D.N.Y. 2023)

- NIST AI Risk Management Framework (2023)

- U.K. Solicitors Regulation Authority: Ethics in the Use of Artificial Intelligence (2024)

This article was prepared for educational and informational purposes only. It does not constitute legal advice and should not be relied upon as such. All cases, statutes, and sources cited are publicly available through official publications and reputable outlets. Readers should consult professional counsel for specific legal or compliance questions related to AI use.

Jon Dykstra, LL.B., MBA, is a legal AI strategist and founder of Jurvantis.ai. He is a former practicing attorney who specializes in researching and writing about AI in law and its implementation for law firms. He helps lawyers navigate the rapid evolution of artificial intelligence in legal practice through essays, tool evaluation, strategic consulting, and full-scale A-to-Z custom implementation.