Feeding the Machine: Are Law Firms Using Client Data to Train AI Without Permission?

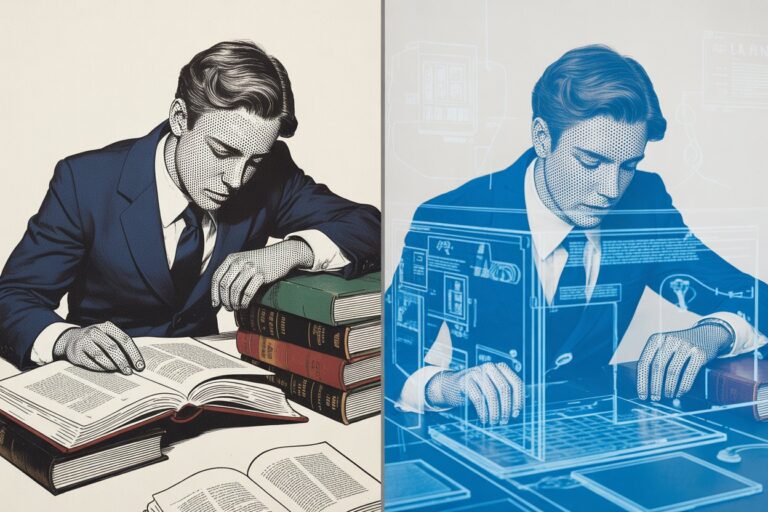

Artificial intelligence thrives on data, and few industries hold more sensitive data than law. As firms rush to automate research, draft filings, and streamline client work, a crucial question persists: are the client files protected by confidentiality and privilege now fueling machine learning behind the scenes?

Where Privilege Becomes Training Data

Law firms increasingly deploy generative AI tools for drafting, due diligence, and document analysis. Those systems improve through exposure to legal text such as filings, contracts, and discovery records. If a firm uses active or historical client materials to train or fine-tune an AI model, however, the boundary between knowledge management and data misuse becomes perilously thin. Confidentiality, long treated as a professional constant, collides with the statistical logic of machine learning.

The American Bar Association’s Formal Opinion 512, issued in July 2024, underscores that lawyers must maintain competence, confidentiality, and independent judgment when using generative AI. Model Rule 1.6 bars a lawyer from revealing information relating to a representation without the client’s informed consent, subject to limited exceptions, and Opinion 512 concludes that informed consent is required before such information is input into a self-learning generative AI tool. The same principle applies to letting a vendor, or a firm’s internal data scientists, train a model on confidential client material without client authorization.

Hidden Exposure in Third-Party AI Tools

Many firms now rely on external AI vendors for search, transcription, and drafting. Some of those platforms reserve rights to reuse uploaded text for “product improvement.” A lawyer who uploads discovery materials to a public chatbot or unvetted software risks placing client confidences into a corporate training corpus. Even enterprise products can create exposure if their service terms lack explicit prohibitions on model retraining. The Federal Trade Commission has repeatedly warned that using customer data to train AI in ways that contradict a company’s privacy or confidentiality commitments, or without adequate notice, may be an unfair or deceptive practice under Section 5 of the FTC Act.

In February 2024, the FTC cautioned that companies adopting more permissive data practices, such as using customer data for AI training, through retroactive or surreptitious changes to their terms of service may violate consumer protection law. Technology firms often describe such reuse as “anonymized learning,” yet machine learning models can memorize and regenerate original content. Research by Nicholas Carlini and colleagues at Google and other institutions has demonstrated that large language models can reproduce verbatim passages from their training data, undermining claims that the underlying material cannot be recovered. Promises of anonymity, in other words, are not something lawyers can afford to take on trust.

Informed Consent in the Age of Automation

Engagement letters rarely mention AI. Generic clauses authorizing “third-party technology tools” may not satisfy the duty of informed consent when the tool continuously learns from client content; ABA Formal Opinion 512 states that boilerplate consent language in an engagement letter is not sufficient. The District of Columbia Bar’s Opinion 388, issued in April 2024, advises that lawyers must disclose material risks of AI use, including data sharing, and secure client approval if information leaves firm control. The opinion uses Mata v. Avianca, the 2023 federal case in which lawyers were sanctioned for submitting filings that cited nonexistent cases generated by ChatGPT, as an instructive example of what can go wrong when lawyers fail to understand AI limitations. State bars from California to Florida have already issued similar guidance promoting transparency in client communications.

Consent alone does not resolve risk. If the model vendor stores data in jurisdictions with weaker protections or fails to segregate legal materials from other corporate inputs, confidentiality can be compromised and privilege placed at risk. The ISO/IEC 42001:2023 AI management system standard, published in December 2023, outlines governance requirements for organizations developing or deploying AI, including record-keeping, audit trails, and data-use accountability. While not binding, such frameworks foreshadow what regulators may soon expect of law firms handling client information through AI pipelines.

Global Cautionary Signals

Outside the United States, regulators are moving faster. The European Union’s AI Act, which entered into force in August 2024, classifies AI systems intended to assist judicial authorities in researching and interpreting facts and the law, and in applying the law to concrete facts, as “high-risk,” subjecting them to requirements that include transparency and human oversight. The United Kingdom’s Solicitors Regulation Authority has published guidance emphasizing that adopting AI does not diminish solicitors’ duties of competence, confidentiality, and accountability. In Canada, the federal privacy commissioner and provincial and territorial counterparts issued joint principles in December 2023 for responsible, privacy-protective use of generative AI. The pattern is clear: professional secrecy remains non-negotiable, even when the algorithm promises efficiency.

Building Firewalls of Trust

Robust governance begins at home. A responsible firm policy should inventory all AI tools in use, review vendor terms, and restrict training on any material derived from client work. Legal departments are adopting “zero-training” clauses that prohibit vendors from using client data for model improvement. Larger firms are developing internal sandboxes (isolated, on-premises models trained solely on public legal data) to balance innovation with compliance. The NIST AI Risk Management Framework, released in January 2023, offers a voluntary structure for evaluating such systems, organized around four functions (Govern, Map, Measure, and Manage) and trustworthiness characteristics that include accountability, transparency, and privacy.

Specialized tools have emerged to address these concerns. Some law firms now use legal AI platforms such as Harvey, CoCounsel (developed by Casetext, which Thomson Reuters acquired in 2023), and Thomson Reuters’ Westlaw Precision, whose vendors advertise contractual commitments not to train on client data. Others use services such as Clearbrief, which reports a SOC 2 Type 2 attestation and strict data-handling controls. Vendor assurances are only a starting point; the key is thorough due diligence, including a close reading of contract terms, before implementation.

Human review remains the essential safeguard. Every AI output touching client work should be verified by a lawyer who understands both the legal context and the system’s limitations. Delegating confidentiality to code replaces professional judgment with probability. And once a model has learned from client data, that information cannot reliably be removed.

The State-Level Response

State bar associations have responded with increasing specificity. Florida Bar Ethics Opinion 24-1 recommends that lawyers obtain informed client consent before using a third-party generative AI program if confidential information will be disclosed. The California State Bar’s Standing Committee on Professional Responsibility and Conduct published practical guidance advising that lawyers must not input confidential client information into AI systems lacking adequate confidentiality and security protections. New York’s State Bar Association Task Force on Artificial Intelligence released comprehensive recommendations in April 2024, emphasizing that attorneys cannot rely on AI without verification and must acknowledge mistakes made by AI systems to courts.

Kentucky Bar Association Opinion E-457 clarifies that routine AI use need not be disclosed unless clients are charged for AI-related costs or confidential information will be provided to the system. Pennsylvania and Philadelphia bar associations issued joint guidance requiring lawyers to inform clients about AI use and explain its potential impact on case objectives.

Moving Forward

As AI becomes essential infrastructure for legal practice, the question is no longer whether to adopt these tools but how to deploy them without eroding the confidentiality obligations that define the profession. Regulators are watching. Clients are increasingly sophisticated about data rights. And the first major scandal involving client data used for AI training without consent will reshape this entire conversation. Firms that act now to establish rigorous data governance will be far better positioned to avoid becoming that cautionary tale.

Sources

- American Bar Association: Formal Opinion 512 on Generative Artificial Intelligence Tools (July 2024)

- California State Bar: Practical Guidance for the Use of Generative Artificial Intelligence in the Practice of Law (November 2023)

- Carlini, Nicholas, et al.: Quantifying Memorization Across Neural Language Models (2023)

- District of Columbia Bar: Opinion 388 on Attorneys’ Use of Generative Artificial Intelligence in Client Matters (April 2024)

- European Union: Artificial Intelligence Act (Regulation (EU) 2024/1689, entered into force August 2024)

- Florida Bar: Ethics Opinion 24-1 on Use of Generative Artificial Intelligence (January 2024)

- U.S. Federal Trade Commission: AI (and other) Companies: Quietly Changing Your Terms of Service Could Be Unfair or Deceptive (February 2024)

- U.S. Federal Trade Commission: AI Companies: Uphold Your Privacy and Confidentiality Commitments (January 2024)

- ISO/IEC 42001:2023: Artificial Intelligence Management System Standard (December 2023)

- Kentucky Bar Association: Ethics Opinion E-457 on Use of Artificial Intelligence (March 2024)

- National Institute of Standards and Technology: AI Risk Management Framework (January 2023)

- New York State Bar Association: Report and Recommendations of the Task Force on Artificial Intelligence (April 2024)

- United Kingdom Solicitors Regulation Authority: Risk Outlook Report: The Use of Artificial Intelligence in the Legal Market (November 2023)

This article was prepared for educational and informational purposes only. It does not constitute legal advice and should not be relied upon as such. All cases, regulations, and sources cited are publicly available through official regulatory bodies, court filings, and reputable sources. Readers should consult professional counsel for specific legal or compliance questions related to AI use.

See also: Beyond Human Hands: The Uncertain Copyright of AI-Drafted Law

Jon Dykstra, LL.B., MBA, is a legal AI strategist and founder of Jurvantis.ai. He is a former practicing attorney who specializes in researching and writing about AI in law and its implementation for law firms. He helps lawyers navigate the rapid evolution of artificial intelligence in legal practice through essays, tool evaluation, strategic consulting, and full-scale A-to-Z custom implementation.